Top 10 List of Week 05

-

Virtual Memory

Virtual memory is a memory management scheme where secondary storage can be used as if it were a part of the main memory. The main adavantage of this scheme is that programs can be larger than physical memory. Virtual memory is commonly implemented by demand paging. It can also be implemented in a segmentation system. Demand segmentation can also be used to provide virtual memory. -

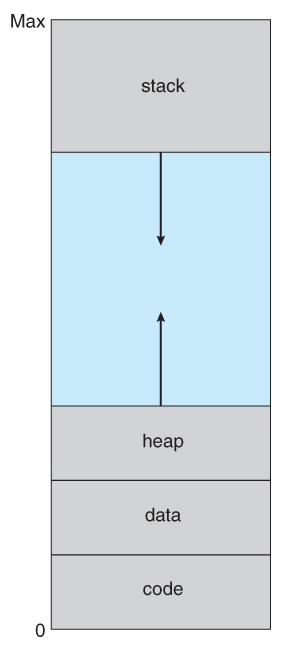

Virtual Address Space

Virtual Address Space (VAS) or address space is the set of ranges of virtual addresses that an operating system makes available to a process. The range of virtual addresses usually starts at a low address and can extend to the highest address allowed by the computer’s instruction set architecture and supported by the operating system’s pointer size implementation. The hole in the virtual address space is sparse. The hole can be filled when the stack and/or the heap grow. - Demand Paging

The basic idea behind demand paging is that when a process is swapped in, its pages are not swapped in all at once. Rather they are swapped in only when the process needs them(On demand). This is termed as lazy swapper, although a pager is a more accurate term. The pages that are not moved into the memory, are marked as invalid in the page table. For an invalid entry the rest of the table is empty. In case of pages that are loaded in the memory, they are marked as valid along with the information about where to find the swapped out page. if a page is needed that was not originally loaded up, then a page fault trap is generated, which must be handled in a series of steps:- The memory address which is requested by the process is first checked, to verify the request made by the process.

- If its found to be invalid, the process is terminated.

- In case the request by the process is valid, a free frame is located, possibly from a free-frame list, where the required page will be moved.

- A new operation is scheduled to move the necessary page from disk to the specified memory location. ( This will usually block the process on an I/O wait, allowing some other process to use the CPU in the meantime. )

- When the I/O operation is complete, the process’s page table is updated with the new frame number, and the invalid bit is changed to valid.

- The instruction that caused the page fault must now be restarted from the beginning.

-

Copy on Write

Copy-on-write or CoW is a technique to efficiently copy data resources in a computer system. The idea behind a copy-on-write is that when a parent process creates a child process then both of these processes initially will share the same pages in memory and these shared pages will be marked as copy-on-write which means that if any of these processes will try to modify the shared pages then only a copy of these pages will be created and the modifications will be done on the copy of pages by that process and thus not affecting the other process. - Page Replacement Algorithms

The page replacement algorithm decides which memory page is to be replaced. The process of replacement is sometimes called swap out or write to disk. Page replacement is done when the requested page is not found in the main memory (page fault). There are various page replacement algorithms. Each algorithm has a different method by which the pages can be replaced. Here are some of the page replacement algorithms:- Optimal Page Replacement algorithm this algorithms replaces the page which will not be referred for so long in future. Although it can not be practically implementable but it can be used as a benchmark. Other algorithms are compared to this in terms of optimality.

- Least recent used (LRU) page replacement algorithm this algorithm replaces the page which has not been referred for a long time. This algorithm is just opposite to the optimal page replacement algorithm. In this, we look at the past instead of staring at future.

- FIFO in this algorithm, a queue is maintained. The page which is assigned the frame first will be replaced first. In other words, the page which resides at the rare end of the queue will be replaced on the every page fault.

- Page-Buffering Algorithms

- Maintain a certain minimum number of free frames at all times. When a page-fault occurs, go ahead and allocate one of the free frames from the free list first, to get the requesting process up and running again as quickly as possible, and then select a victim page to write to disk and free up a frame as a second step.

- Keep a list of modified pages, and when the I/O system is otherwise idle, have it write these pages out to disk, and then clear the modify bits, thereby increasing the chance of finding a “clean” page for the next potential victim.

- Keep a pool of free frames, but remember what page was in it before it was made free. Since the data in the page is not actually cleared out when the page is freed, it can be made an active page again without having to load in any new data from disk. This is useful when an algorithm mistakenly replaces a page that in fact is needed again soon.

- Allocation of Frames

Frame allocation algorithms are used if we have multiple processes and it helps decide how many frames to allocate to each process. The two algorithms commonly used to allocate frames to a process are:- Equal allocation: In a system with x frames and y processes, each process gets equal number of frames, i.e. x/y.

- Proportional allocation: Frames are allocated to each process according to the process size. For a process pi of size si, the number of allocated frames is ai = (si/S)*m, where S is the sum of the sizes of all the processes and m is the number of frames in the system.

-

Thrashing

If page fault and swapping happening very frequently at higher rate, then operating system has to spend more time to swap these pages. This state is called thrashing. Because of this, CPU utilization is going to be reduced. -

Working Set Model

This model is based on locality. What locality is saying, the page used recently can be used again and also the pages which are nearby this page will also be used. Working set means set of pages in the most recent D time. The page which completed its D amount of time in working set automatically dropped from it. So accuracy of working set depends on D we have chosen. This working set model avoid thrashing while keeping the degree of multiprogramming as high as possible. - Allocating Kernel Memories

Two strategies for managing free memory that is assigned to kernel processes:- Buddy System

Buddy allocation system is an algorithm in which a larger memory block is divided into small parts to satisfy the request. This algorithm is used to give best fit. The two smaller parts of block are of equal size and called as buddies. In the same manner one of the two buddies will further divide into smaller parts until the request is fulfilled. Benefit of this technique is that the two buddies can combine to form the block of larger size according to the memory request. - Slab Allocation

A second strategy for allocating kernel memory is known as slab allocation. It eliminates fragmentation caused by allocations and deallocations. This method is used to retain allocated memory that contains a data object of a certain type for reuse upon subsequent allocations of objects of the same type. In slab allocation memory chunks suitable to fit data objects of certain type or size are preallocated. Cache does not free the space immediately after use although it keeps track of data which are required frequently so that whenever request is made the data will reach very fast.

- Buddy System